Project Overview

Project Repository

Developed a simple neural network framework in C++ as a learning exercise to strengthen C++ skills and deepen understanding of neural network fundamentals.

Key Features

- Custom `Tensor` class for matrix operations

- PyTorch-like modular architecture (`Linear`, `ReLU`)

- Implementation of forward and backward passes

- Basic optimization using SGD

Technical Highlights

Tensor Operations:

- Implemented a `Tensor` class for efficient matrix computations

- Supported the basic operations required for neural network computations

Neural Network Modules:

- Created `Linear` and `ReLU` modules mimicking PyTorch’s `torch.nn`

- Implemented a `Sequential` container for easy model construction

Training Loop:

- Implemented the forward pass, loss computation, and backward propagation

- Implemented Stochastic Gradient Descent (SGD) optimizer

Example Usage

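```cpp
// Input: 2 samples with 3 features each; target: one value per sample
Tensor data({1, 2, 3, 4, 5, 6}, 2, 3);
Tensor target({2, -2}, 2, 1);

// 3 -> 5 -> 1 network with a ReLU between the two linear layers
nn::Sequential seq;
seq.add(std::make_shared<nn::Linear>(3, 5));
seq.add(std::make_shared<nn::ReLU>());
seq.add(std::make_shared<nn::Linear>(5, 1));

// SGD over all model parameters with a learning rate of 0.01
auto optimizer = optim::SGD(seq.parameters(), 0.01);

for (int i = 0; i < 100; i++) {
    auto out = seq.forward(data);                                    // forward pass
    auto [loss, loss_grad] = nn::functional::mse_loss(out, target);  // loss and dLoss/dOut
    seq.backward(loss_grad);                                         // backpropagate
    optimizer.step();                                                // update parameters
}
```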

Challenges Overcome

- Implementing efficient matrix operations in C++

- Managing memory and optimizing performance

- Designing a modular and extensible architecture

Tools and Technologies

C++, Make, Git

Future Plans

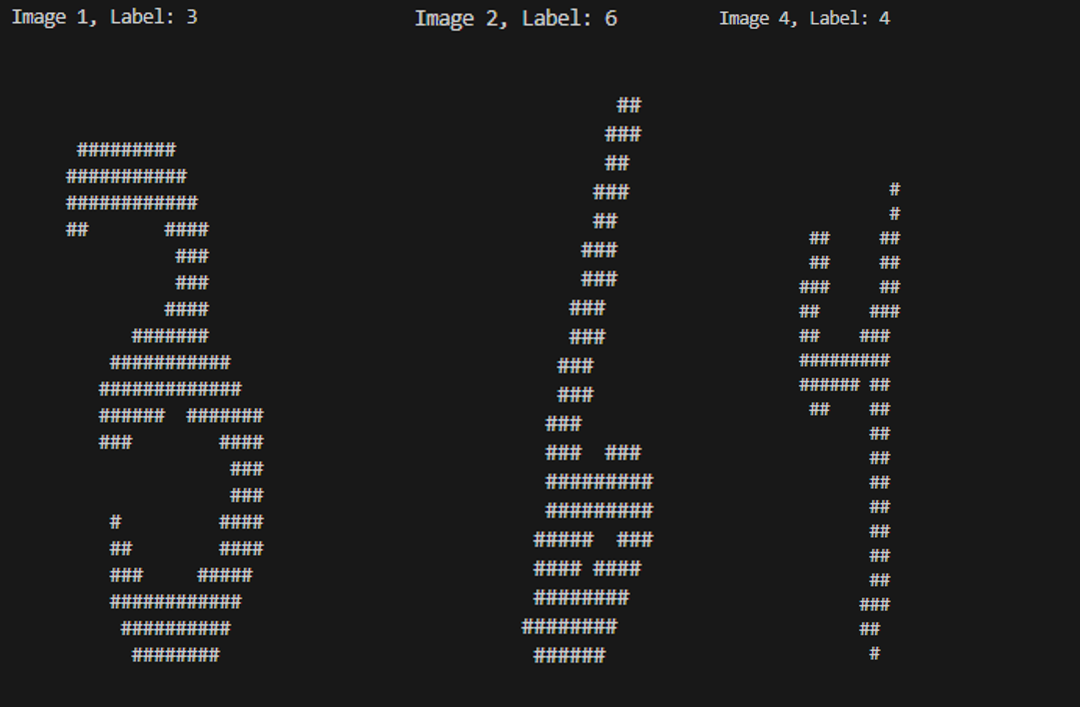

- Add data loader functionality for the MNIST dataset

- Implement additional layer types and activation functions

- Optimize performance for larger networks